In an official blog post, Microsoft is asking people to help their friends and family “get off” Windows XP.

“As a reader of this blog, it’s unlikely you are running Windows XP on your PC. However, you may know someone who is and have even served as their tech support,” writes Microsoft’s Brandon LeBlanc. “To help, we have created a special page on Windows.com that explains what ‘end of support’ means for people still on Windows XP and their options to stay protected after support ends on April 8th.”

LeBlanc then details two upgrade options for Windows XP users. They can either use the Windows Upgrade Assistant to find out whether their current PC can be upgraded to Windows 8 or Windows 8.1, or they can simply buy a new PC.

Upgrading to Windows 8 or 8.1 is likely a tough call for Windows XP users. Holding onto Windows XP carries a notable amount of risk once the April 8 “end of support” date arrives. So users of the outdated operating system must decide: hold onto a more easily exploitable operating system, or spend hundreds of bucks on a system running an OS that’s more secure but, if market share numbers are any indication, significantly less liked by users.

Though security experts would likely advise against the former approach, upgrading to Microsoft’s latest OS is a tough sell for the average Joe and Jane. Then again, there’s always Windows 7, which is the world’s most popular desktop operating system and can currently be had for around $100 on Newegg.

What do you think? Sound off in the comments below.

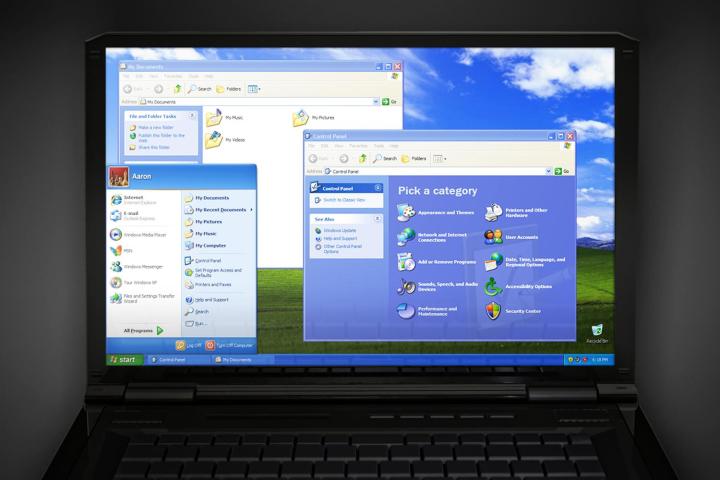

Image courtesy of Shutterstock/RealCG Animation Studio