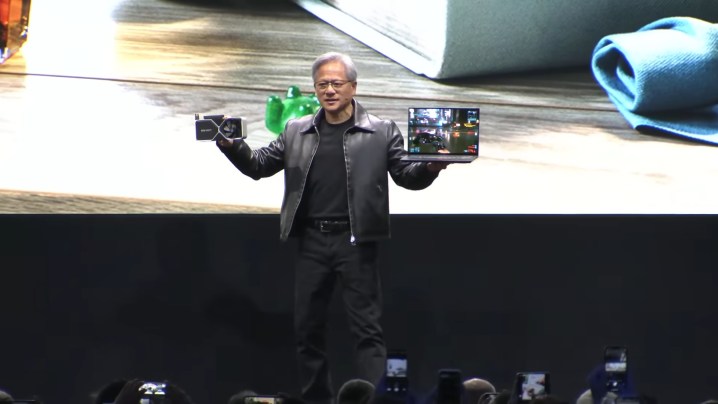

Nvidia CEO Jensen Huang is defending the recently launched RTX 4060 Ti and, in particular, its 8GB of VRAM. The executive spoke about gaming and recent GPU releases in a roundtable interview with reporters at Computex 2023, where he faced questions about the limited VRAM on Nvidia’s most recent GPU.

PCWorld shared a quote in which Huang defended the 8GB of VRAM and told gamers to focus more on how that VRAM is managed: “Remember the frame buffer is not the memory of the computer — it is a cache. And how you manage the cache is a big deal. It is like any other cache. And yes, the bigger the cache is, the better. However, you’re trading off against so many things.”

The RTX 4060 Ti has been met with a lot of criticism due to its 8GB of VRAM, especially as demands for video memory have scaled up in recent PC releases such as Resident Evil 4, Hogwarts Legacy, and The Last of Us Part I. Huang’s response reads as a defense, but it doesn’t address the specific problems with the RTX 4060 Ti’s VRAM.

As Huang says, VRAM is essentially a cache. Data is moved from your storage into your system memory and finally into VRAM. The problem is that when a game needs more data than fits in VRAM, the GPU has to fetch it from system memory, which is far slower. That is a big reason why we’ve seen major stuttering issues and crashes in games like The Last of Us Part I and Hogwarts Legacy. Huang says that managing VRAM is like “kung fu,” and although that may be true in theory, we’ve seen that management fall short in practice.

Huang also didn’t say what exactly gamers are trading off for a larger VRAM capacity. Perhaps power, perhaps cost, or perhaps some combination of factors; it’s hard to tell. Nvidia seems to recognize that capacity is an issue, though, and it’s releasing an RTX 4060 Ti variant with 16GB of VRAM in July. It’s the same GPU with the same performance, just with more VRAM and priced $100 higher than the base model.

Following its Computex keynote, Nvidia became the first chip designer ever to reach a market valuation of over $1 trillion, joining only five other companies currently at that mark. The milestone rang hollow for many gamers, however, as Nvidia largely focused on AI during its keynote, aside from the new Nvidia ACE engine for generative AI in games.

In a response to PCWorld, Huang said that gamers still come first for Nvidia. “Without AI, we could not do ray tracing in real time. It was not even possible,” Huang said. “And the first AI project in our company — the number one AI focus was Deep Learning Super Sampling (DLSS). Deep learning. That is the pillar of RTX.”

It’s fair for gamers to feel left behind, however. Nvidia’s most recent generation has pushed GPU prices higher than they’ve ever been, and in Nvidia’s most recent earnings call, its data center and AI revenue was double what it made from gaming. In addition, Nvidia’s public showcases have increasingly focused on AI, with supercomputers like the DGX GH200 taking center stage.
