“Shocking as it sounds, the RTX 4090 is worth every penny. It's just a shame most people won't be able to afford this excellent GPU.”

Pros

- Huge leaps in 4K gaming performance

- Excellent ray tracing performance

- High power and thermals, but manageable

- DLSS 3 performance is off the charts

Cons

- Very expensive

- DLSS 3 image quality needs some work

The RTX 4090 is both a complete waste of money and the most powerful graphics card ever made. Admittedly, that makes it a difficult product to evaluate, especially considering how much the average PC gamer is looking to spend on an upgrade for their system.

Debuting Nvidia’s new Ada Lovelace architecture, the RTX 4090 has been shrouded in controversy and cited as the poster child for rising GPU prices. As much as it costs, it delivers on performance, especially with the enhancements provided by DLSS 3. Should you save your pennies and sell your car for this beast of a GPU? Probably not. But it’s definitely an exciting showcase of how far this technology can really go.


Nvidia RTX 4090 specs

As mentioned, the RTX 4090 introduces Nvidia’s new Ada Lovelace architecture, as well as chipmaker TSMC’s more efficient 4N manufacturing process. Although it’s impossible to compare the RTX 4090 spec-for-spec with the previous generation, we can glean some insights into what Nvidia prioritized when designing Ada Lovelace.

The main focus: clock speeds. The RTX 3090 Ti topped out at around 1.8GHz, but the RTX 4090 showcases the efficiency of the new node with a 2.52GHz boost clock. That’s with the same board power of 450 watts, but it’s running on more cores. The RTX 3090 Ti was just shy of 11,000 CUDA cores, while the RTX 4090 offers 16,384 CUDA cores.

| | RTX 4090 | RTX 3090 |
| --- | --- | --- |
| Architecture | Ada Lovelace | Ampere |
| Process node | TSMC 4N | 8nm Samsung |
| CUDA cores | 16,384 | 10,496 |
| Ray tracing cores | 128 3rd-gen | 82 2nd-gen |
| Tensor cores | 512 4th-gen | 328 3rd-gen |
| Base clock speed | 2235MHz | 1394MHz |
| Boost clock speed | 2520MHz | 1695MHz |
| VRAM | 24GB GDDR6X | 24GB GDDR6X |
| Memory speed | 21Gbps | 19.5Gbps |
| Bus width | 384-bit | 384-bit |
| TDP | 450W | 350W |

It’s hard to say how much those extra cores matter, especially for gaming. Down the stack, the 16GB RTX 4080 has a little more than half as many cores as the RTX 4090, while the 12GB RTX 4080 has even fewer. Clock speeds remain high, but the specs of the RTX 40-series family right now suggest that the boosted core count won’t be a major selling point, at least for gaming.
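For a rough sense of what the core counts and boost clocks add up to on paper, here’s a minimal sketch of the usual theoretical FP32 throughput estimate. It assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock at the rated boost speed, which real games rarely sustain. The `fp32_tflops` helper is a name made up for this illustration.

```python
def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: one fused multiply-add (2 FLOPs) per CUDA core per clock,
    running at the rated boost clock the whole time.
    """
    flops = 2 * cuda_cores * boost_mhz * 1e6
    return flops / 1e12


# Core counts and boost clocks taken from the spec table above.
rtx_4090 = fp32_tflops(16_384, 2520)
rtx_3090 = fp32_tflops(10_496, 1695)

print(f"RTX 4090: {rtx_4090:.1f} TFLOPS")
print(f"RTX 3090: {rtx_3090:.1f} TFLOPS")
print(f"On-paper ratio: {rtx_4090 / rtx_3090:.2f}x")
```

On paper, that’s a little more than twice the RTX 3090’s throughput. Treat it as a ceiling rather than a prediction, since memory bandwidth, the CPU, and the game engine all get a say in real frame rates.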

Synthetic and rendering

Before getting into the full benchmark suite, let’s take a high-level look at performance. Port Royal and Time Spy from 3DMark show how well Nvidia’s latest flagship scales, with a 58% gain over the RTX 3090 Ti in Time Spy and a 102% increase over the RTX 3090 in Port Royal.

It’s important to note that 3DMark isn’t the best way to judge performance, as it factors in your CPU much more than most games do (especially at 4K). In the case of the RTX 4090, though, 3DMark shows the scaling well. In fact, my results from real games are actually a little higher than what this synthetic benchmark suggests, at least outside of ray tracing.

I also tested Blender to gauge some content creation tasks with the RTX 4090, and the improvements are astounding. Blender is accelerated by Nvidia’s CUDA cores, and the RTX 4090 seems particularly optimized for these types of workloads. It put up more than double the score of the RTX 3090 and RTX 3090 Ti in the Monster and Junkshop scenes, and just under double in the Classroom scene. AMD’s GPUs, which don’t have CUDA, aren’t even close.

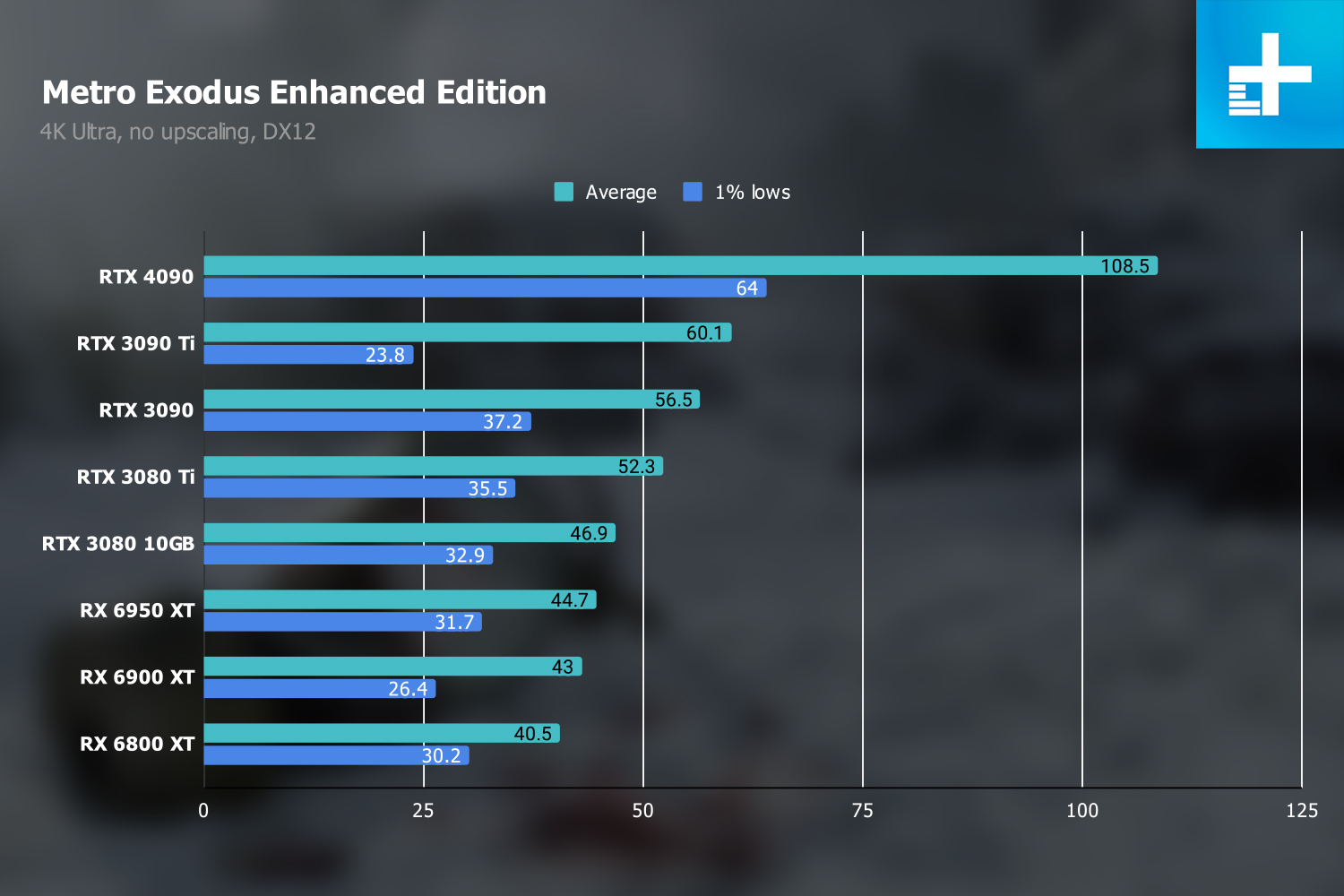

4K gaming performance

On to the juicy bits. All of my tests were done with a Ryzen 9 7950X and 32GB of DDR5-6000 memory on an open-air test bench. I kept Resizable BAR turned on throughout testing, or in the case of AMD GPUs, Smart Access Memory.

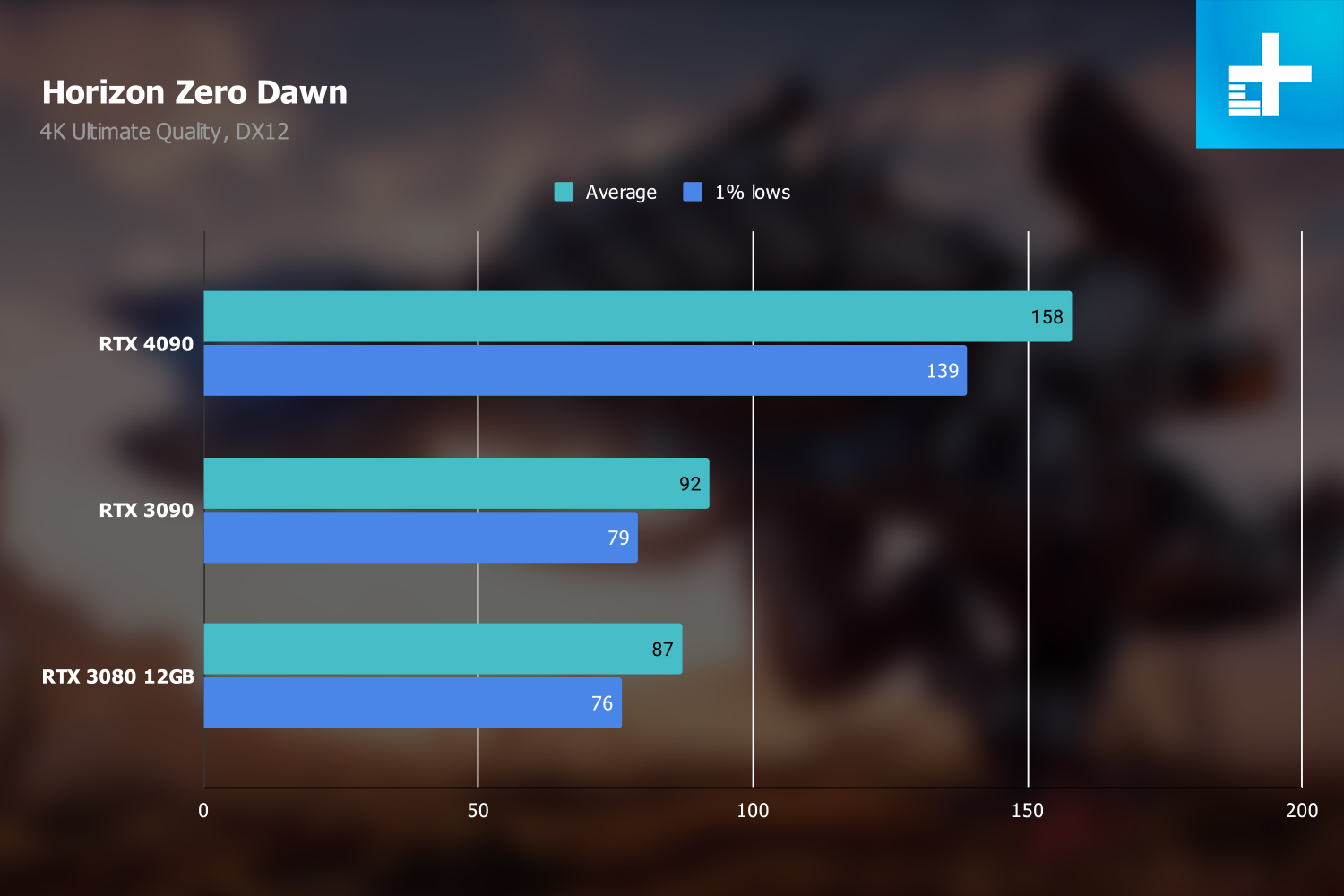

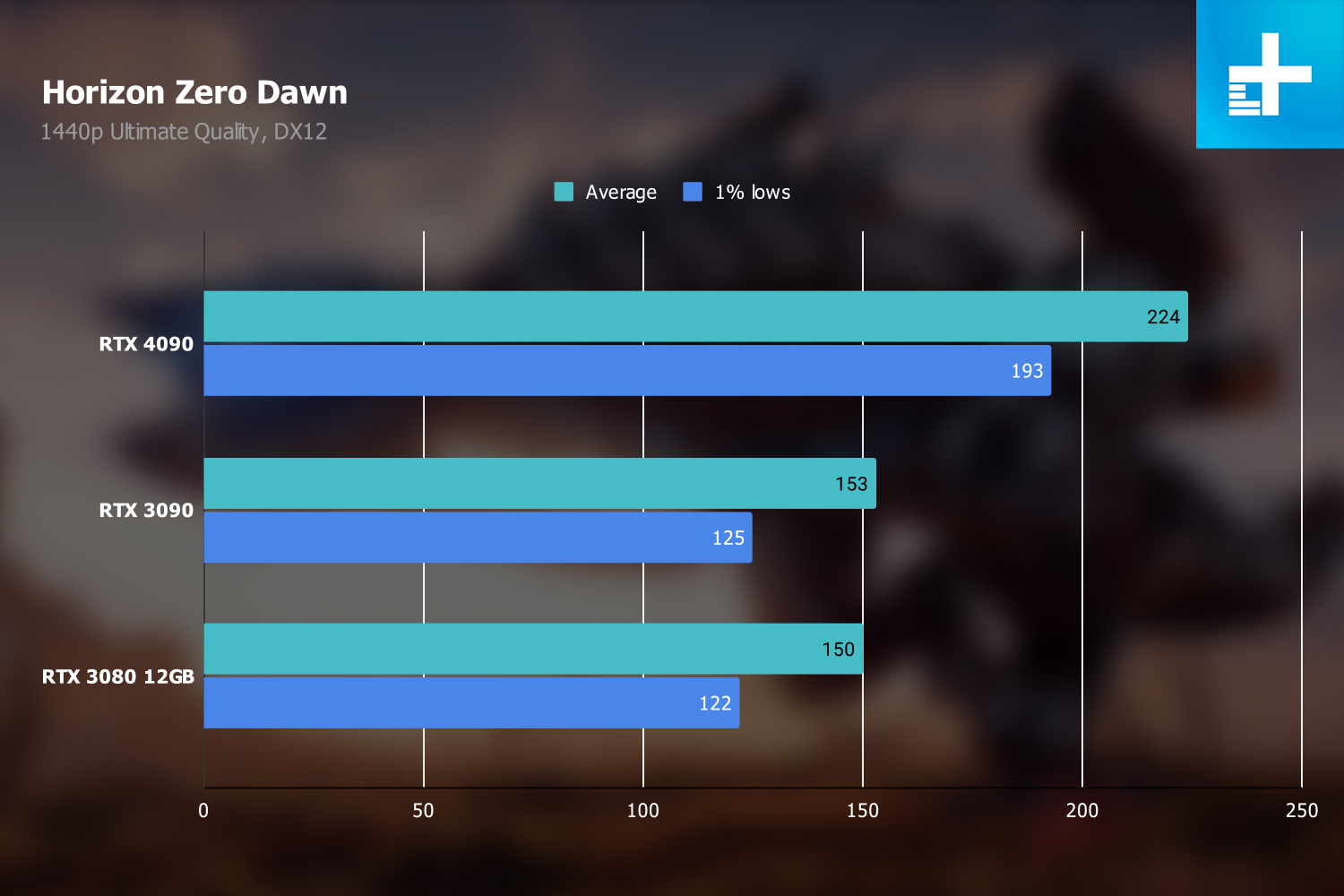

The RTX 4090 is a monster physically, but it’s also a monster when it comes to 4K gaming performance. Across my test suite, excluding Bright Memory Infinite and Horizon Zero Dawn, which I have incomplete data for, the RTX 4090 was 68% faster than the RTX 3090 Ti. Compared to the RTX 3090, you’re looking at nearly an 89% boost.

That’s a huge jump, much larger than the 30% boost we saw gen-on-gen with the release of the RTX 3080. And none of those numbers factor in upscaling. This is raw performance, including ray tracing, and the RTX 4090 is showing a huge lead over the previous generation.

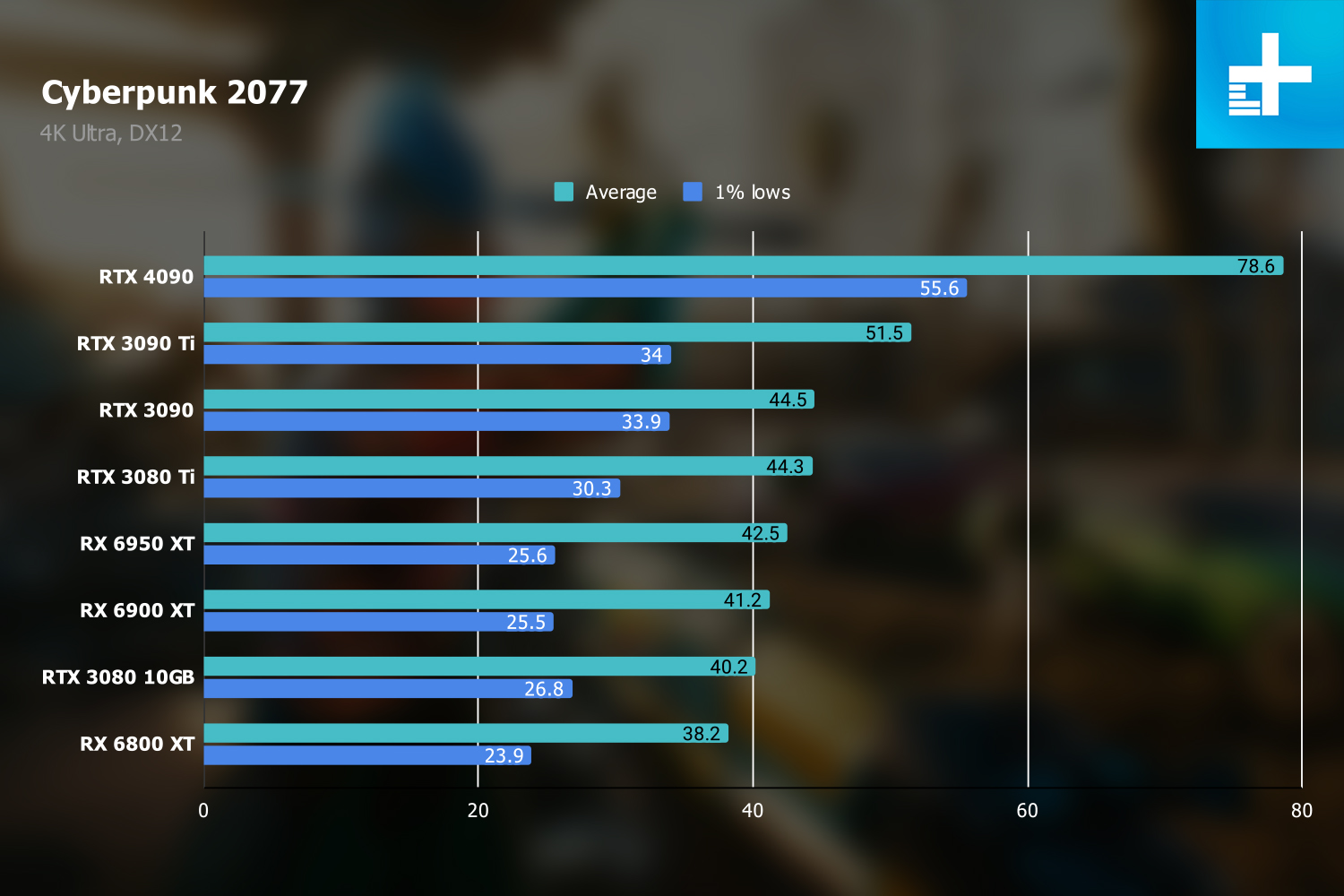

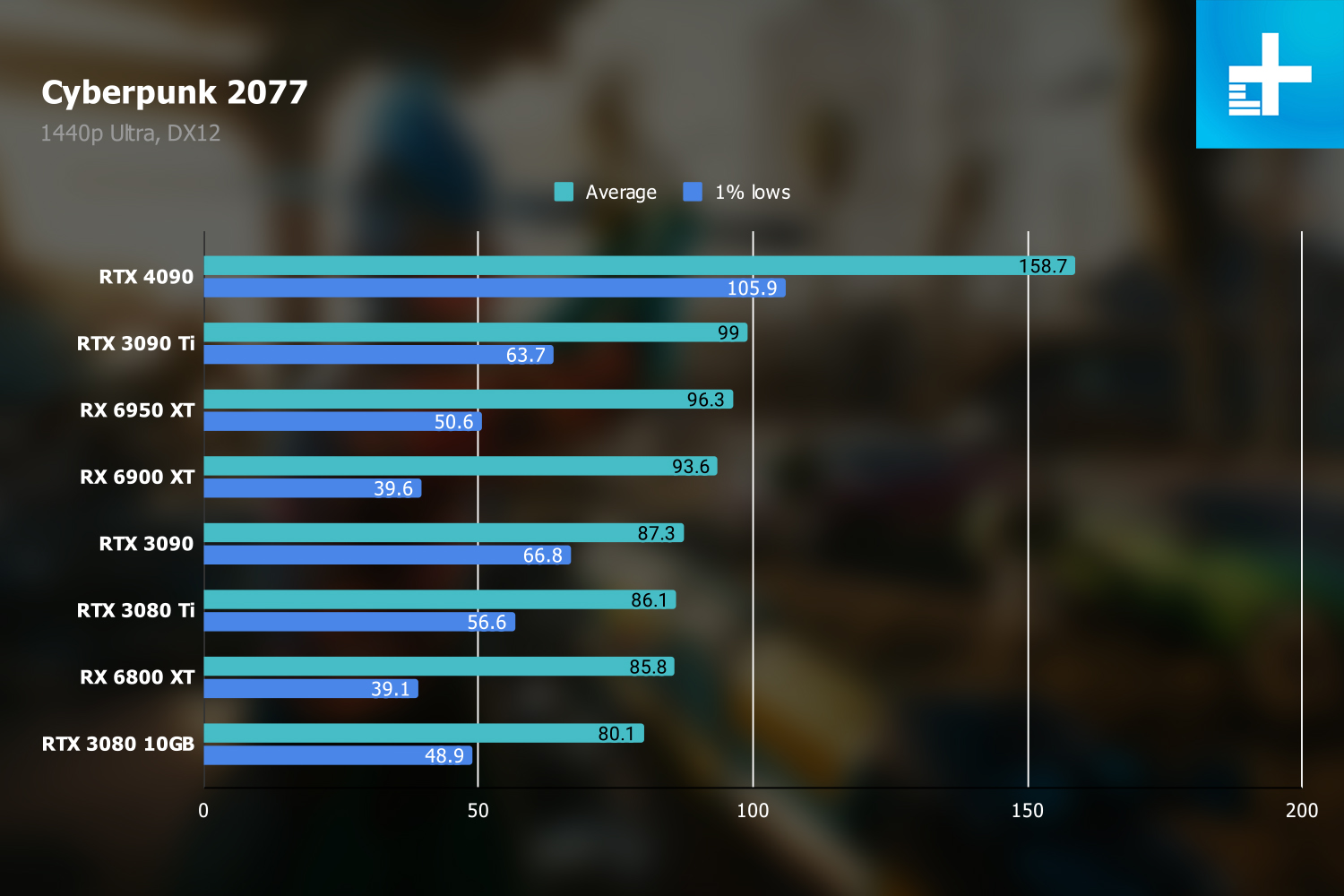

Perhaps the most impressive showing was Cyberpunk 2077. The RTX 4090 is just over 50% faster than the RTX 3090 Ti at 4K with maxed-out settings, which is impressive enough. It’s the fact that the RTX 4090 cracks 60 frames per second (fps) that stands out, though. Even the most powerful graphics cards in the previous generation couldn’t get past 60 fps without assistance from Deep Learning Super Sampling (DLSS). The RTX 4090 can break that barrier while rendering every pixel, and do so with quite a lead.

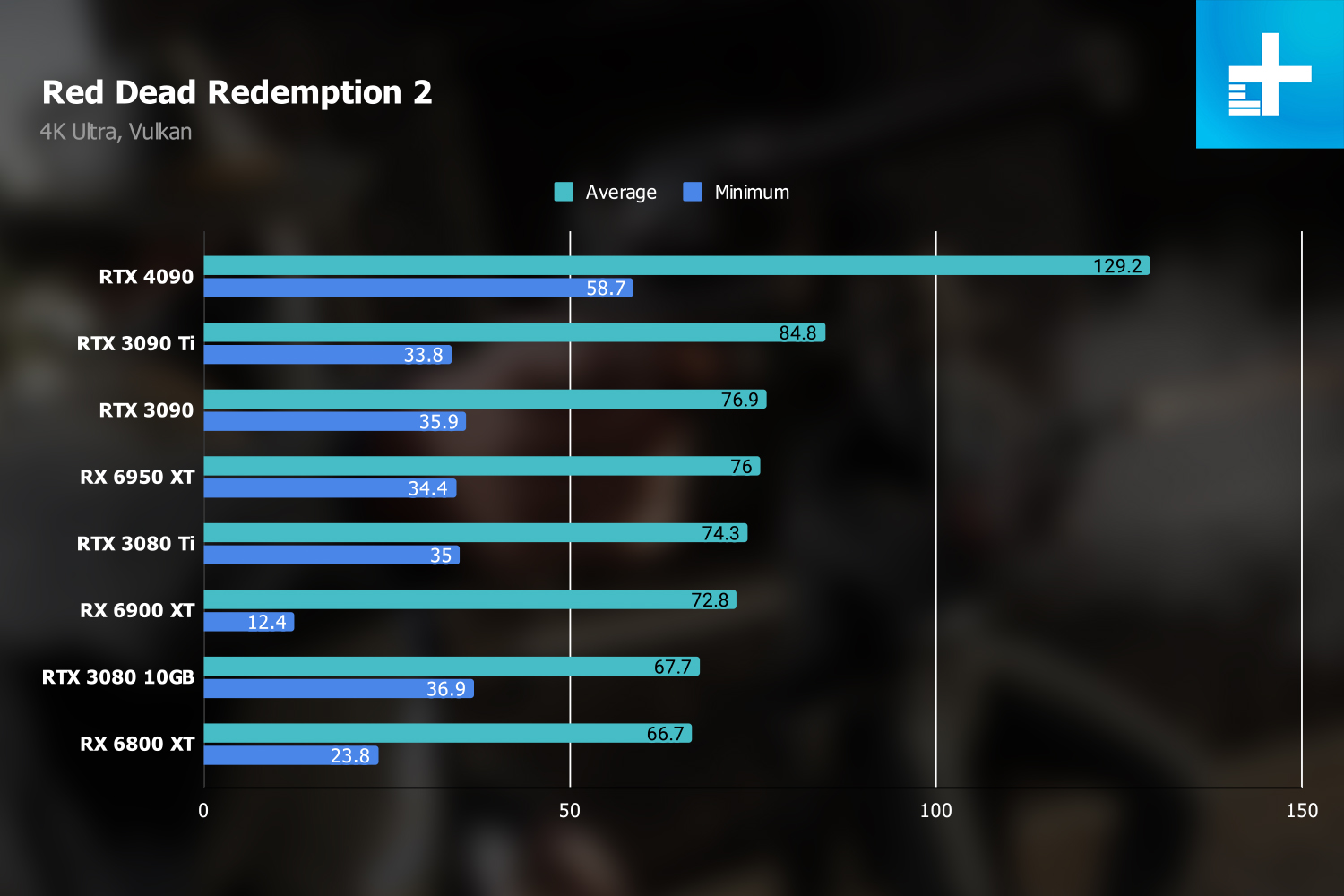

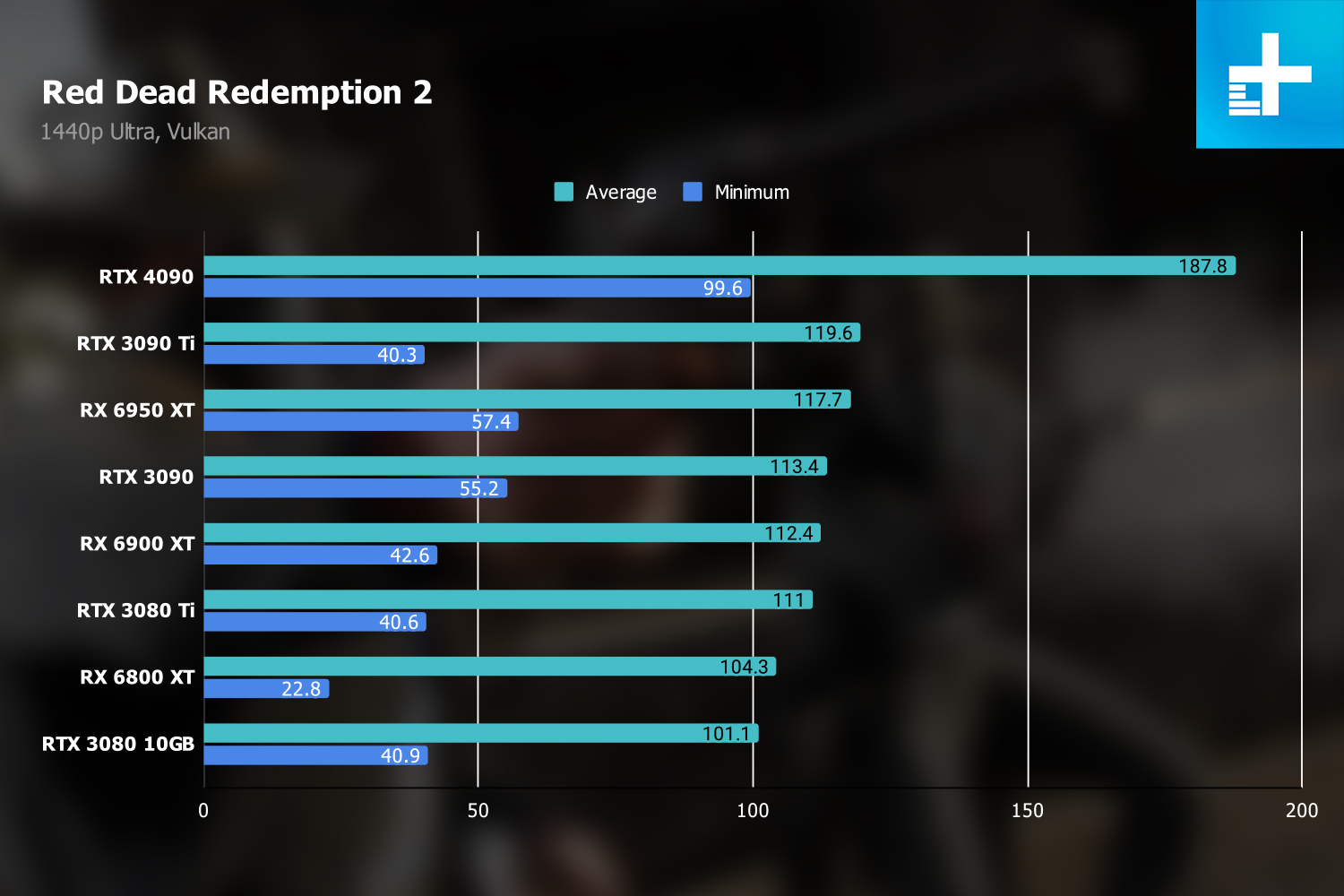

Gears Tactics also shows the RTX 4090’s power, winning out over the RTX 3090 Ti with a 73% lead. In a Vulkan title like Red Dead Redemption 2, the gains are smaller, but the RTX 4090 still managed a 52% lead based on my testing. This is a huge generational leap in performance, though it’s still below what Nvidia originally promised.

Nvidia has marketed the RTX 4090 as “two to four times faster” than the RTX 3090 Ti, and that’s not true. It’s much faster than the previous top dog, but Nvidia’s claim only makes sense when you factor in DLSS 3. DLSS 3 is impressive, and I’ll get to it later in this review. But it’s not in every game and it still needs some work. Thankfully, with the RTX 4090’s raw performance, DLSS is more of a “nice to have” and less of a “need to have.”
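To line the measured leads up against a “times faster” claim, it helps to convert percentages into multipliers. The short sketch below does that for the 4K results quoted in this review. The helper name is made up for illustration, and the Cyberpunk 2077 figure is rounded down to 50% from “just over 50%.”

```python
def percent_lead_to_multiplier(percent_faster: float) -> float:
    """A card that is N% faster delivers (1 + N/100) times the frame rate."""
    return 1 + percent_faster / 100


# RTX 4090 leads over the RTX 3090 Ti at 4K, as quoted in this review.
leads_vs_3090_ti = {
    "4K suite average": 68,
    "Cyberpunk 2077": 50,  # rounded down from "just over 50%"
    "Gears Tactics": 73,
    "Red Dead Redemption 2": 52,
}

multipliers = {
    game: percent_lead_to_multiplier(lead)
    for game, lead in leads_vs_3090_ti.items()
}

for game, multiplier in multipliers.items():
    verdict = "meets" if multiplier >= 2 else "falls short of"
    print(f"{game}: {multiplier:.2f}x ({verdict} the 2x floor of Nvidia's claim)")
```

Every one of these results lands between 1.5x and 1.75x, short of even the low end of “two to four times faster.”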

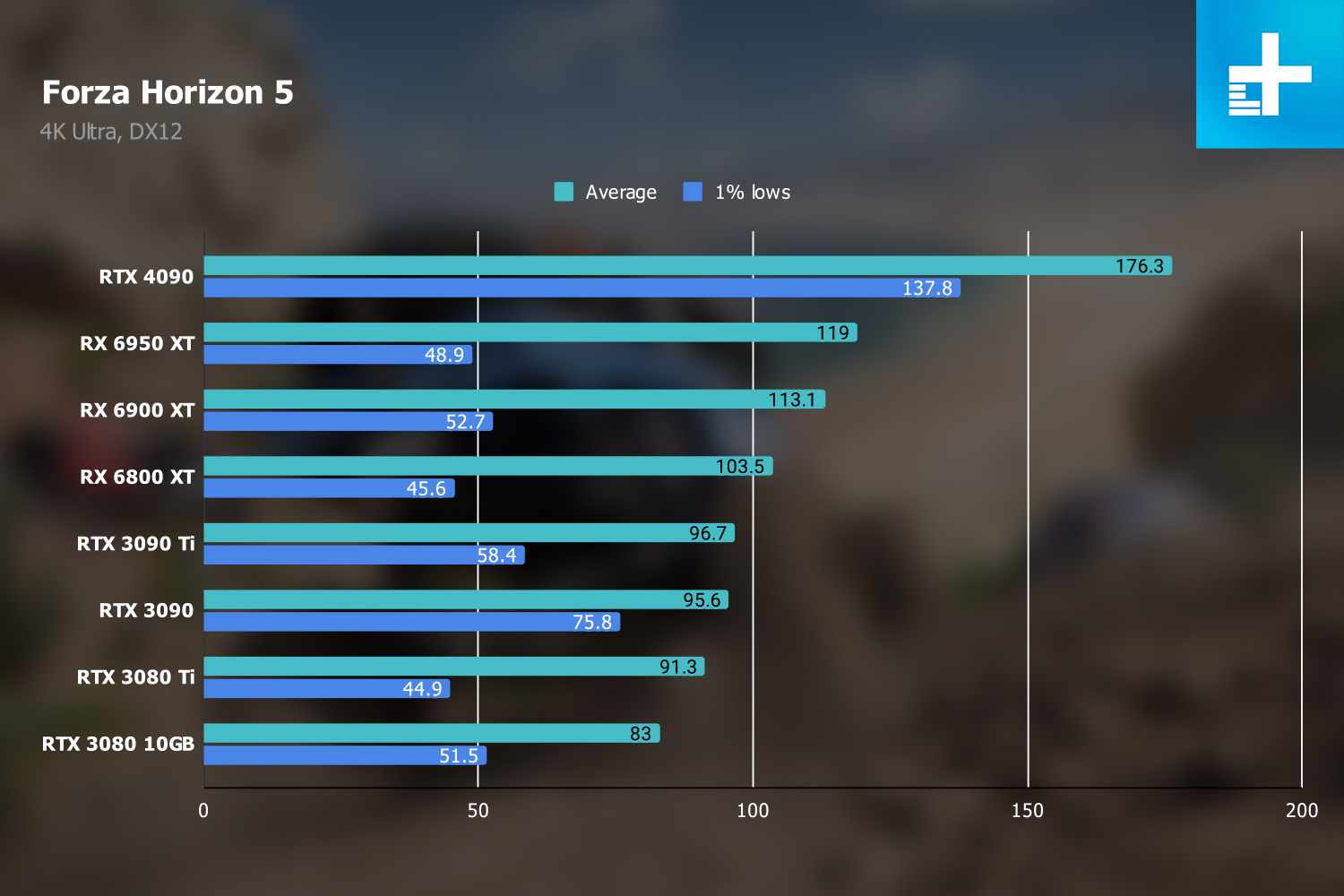

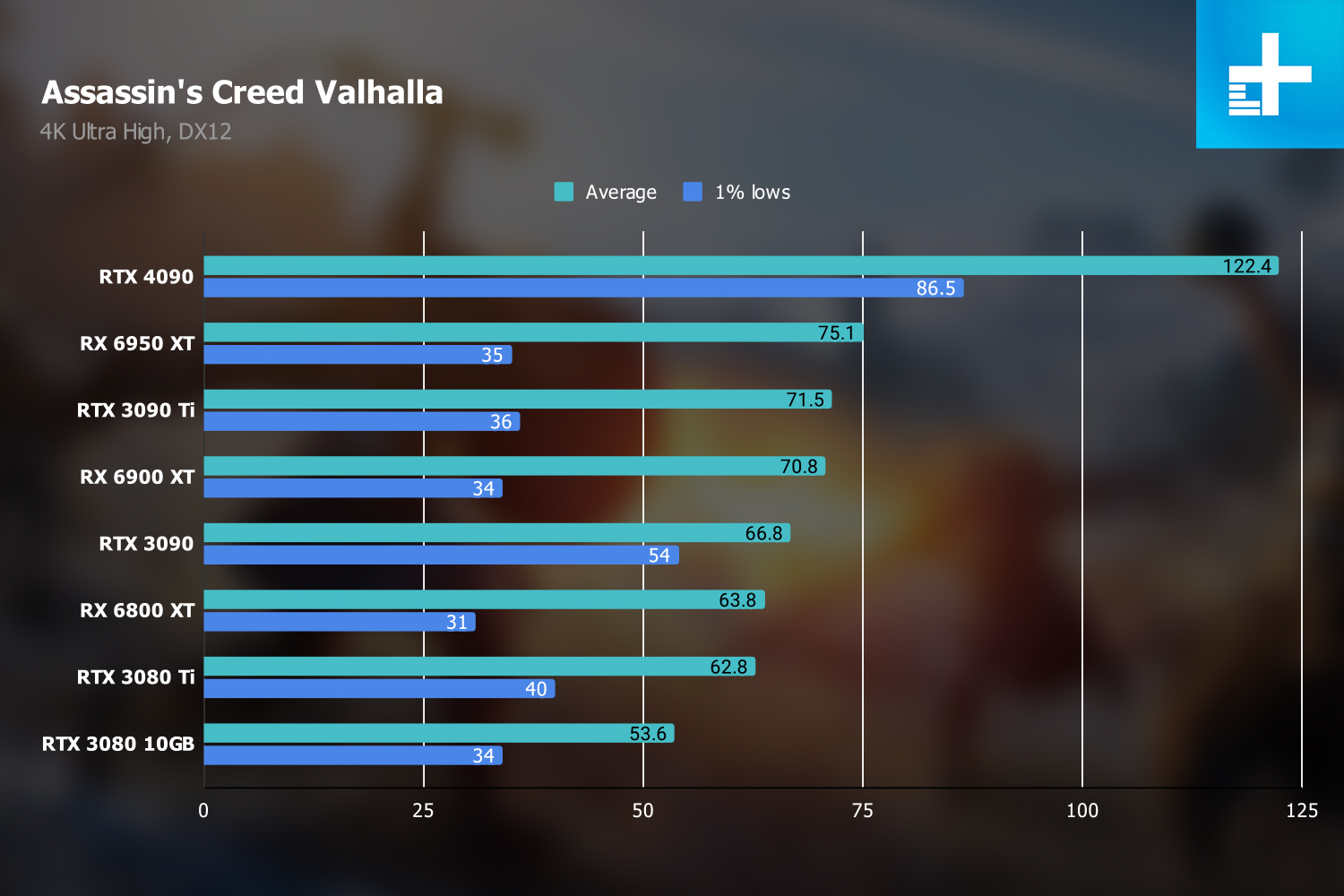

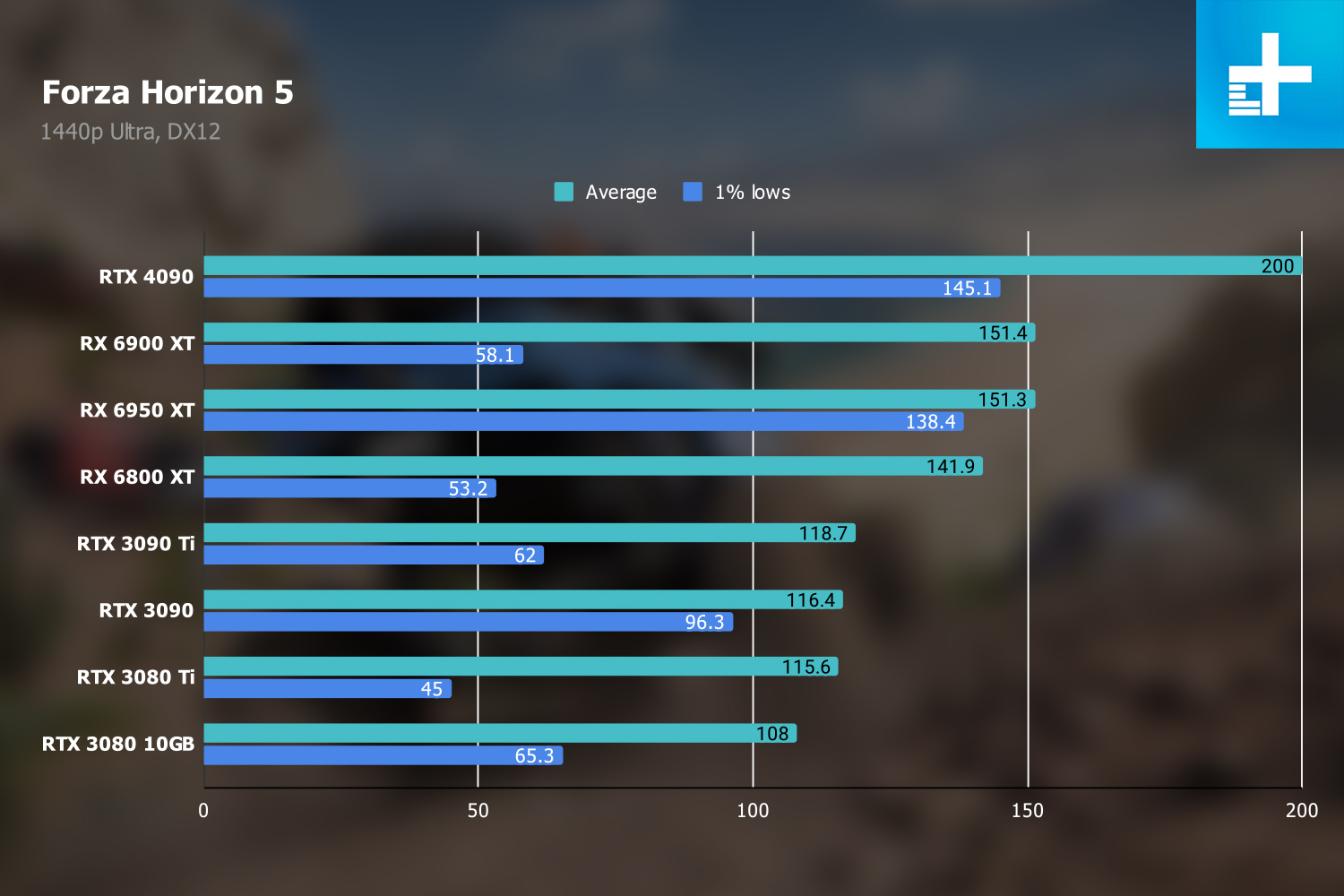

In AMD-promoted titles like Assassin’s Creed Valhalla and Forza Horizon 5, the RTX 4090 still shows its power, though now against AMD’s RX 6950 XT. In Valhalla at 4K, the RTX 4090 managed a 63% lead over the RX 6950 XT. The margins were tighter in Forza Horizon 5, which seems to scale very well with AMD’s current offerings. Even with less of a lead, though, the RTX 4090 is 48% ahead of the RX 6950 XT.

These comparisons are impressive, but the RTX 4090 isn’t on a level playing field with its competitors. At $1,600, Nvidia’s latest flagship is significantly more expensive than even the most expensive GPUs available today. With the performance the RTX 4090 is offering, though, it’s actually a better deal than a cheaper RTX 3090 or RTX 3090 Ti.

In terms of cost per frame, you’re looking at around the same price as an RTX 3080 10GB at $700. This is not the best way to judge value — it assumes you even have the extra cash to spend on the RTX 4090 in the first place, and it doesn’t account for features like DLSS 3 — but as crazy as it sounds, $1,600 is a pretty fair price for the 4K performance the RTX 4090 offers.
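Cost per frame is just price divided by average frame rate, so two cards tie when their speed ratio equals their price ratio. The sketch below works that out for the two list prices mentioned above. The function names and the 60 fps placeholder are hypothetical and only serve as a sanity check; they aren’t measurements from my test suite.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame per second of average performance."""
    return price_usd / avg_fps


def break_even_speedup(price_a: float, price_b: float) -> float:
    """How many times faster card A must be than card B to match its cost per frame."""
    return price_a / price_b


# List prices quoted in the review: RTX 4090 at $1,600, RTX 3080 10GB at $700.
needed = break_even_speedup(1600, 700)
print(f"The RTX 4090 needs about {needed:.2f}x the RTX 3080's frame rate to tie")

# Sanity check with a placeholder frame rate (hypothetical, not measured data).
placeholder_3080_fps = 60.0
tie_4090 = cost_per_frame(1600, placeholder_3080_fps * needed)
tie_3080 = cost_per_frame(700, placeholder_3080_fps)
print(f"${tie_4090:.2f} vs. ${tie_3080:.2f} per frame")
```

In other words, “around the same price” per frame means the RTX 4090 is delivering a bit more than twice the RTX 3080 10GB’s frame rate at 4K.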

Now that the launch dust has settled, make sure to read our RTX 4080 review and RX 7900 XTX review to see how the RTX 4090 stacks up to other high-end GPUs.

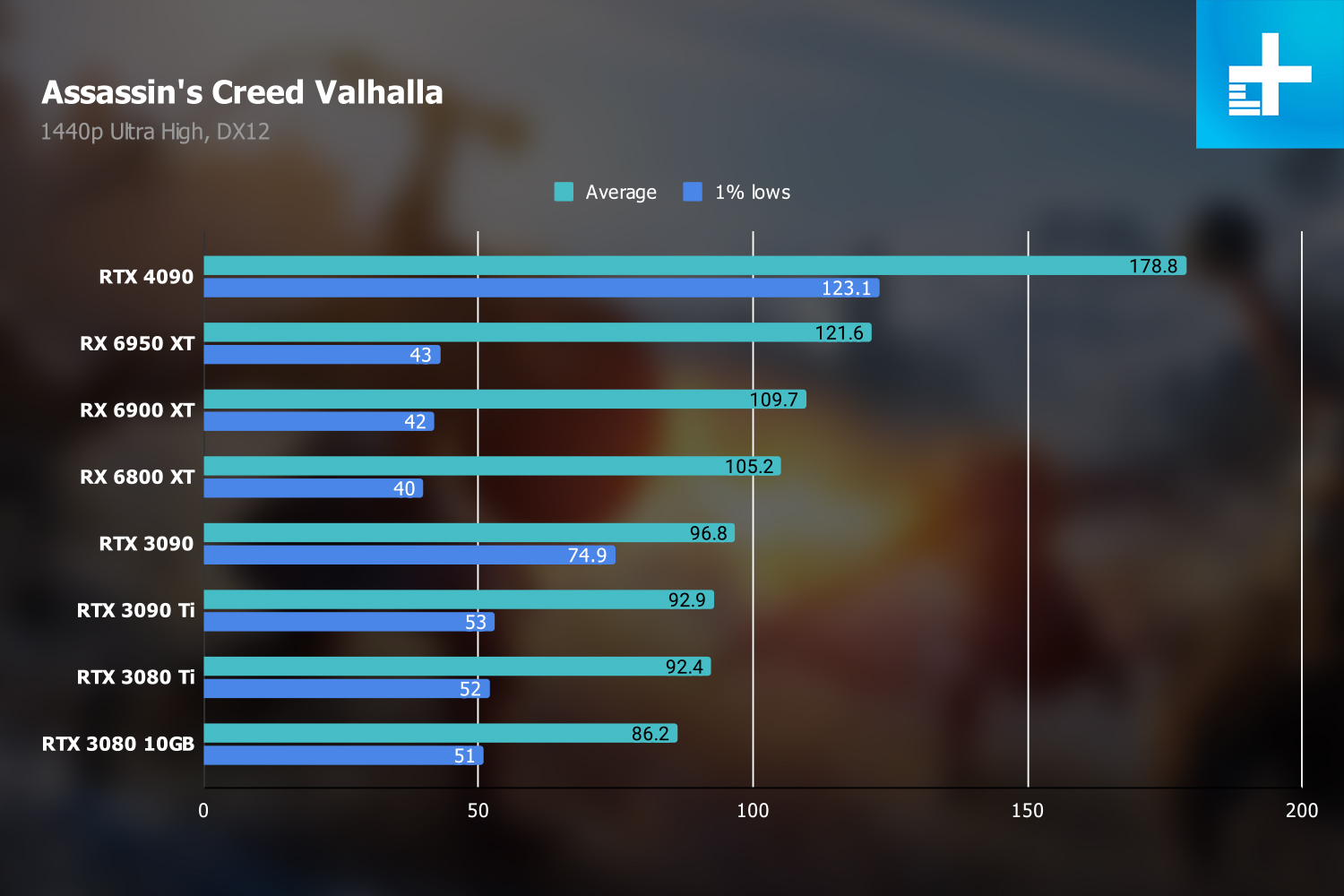

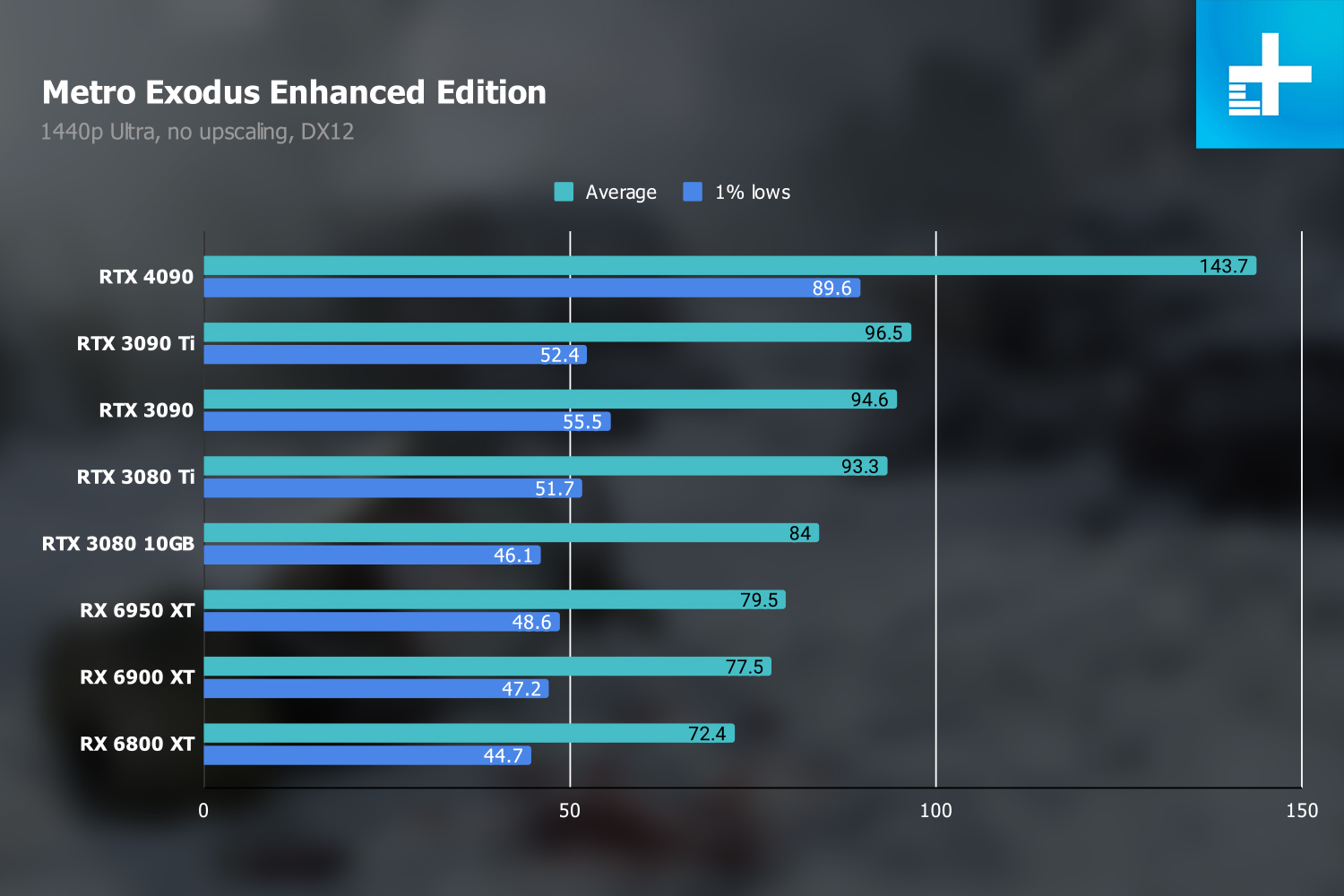

1440p gaming performance

If you’re buying the RTX 4090 for 1440p, you’re wasting your money (read our guide on the best 1440p graphics cards instead). Although it still provides a great improvement over the previous generation, the margins are much slimmer. You’re looking at a 48% bump over the RTX 3090 Ti, and a 68% increase over the RX 6950 XT. Those are still large generational jumps, but the RTX 4090 really shines at 4K.

You start to become a little CPU limited at 1440p, and if you go down to 1080p, the results are even tighter. And frankly, the extra performance at 1440p just doesn’t stand out like it does at 4K. In Gears Tactics, for example, the RTX 4090 is 36% faster than the RTX 3090 Ti, down from the 73% lead Nvidia’s latest card showed at 4K. The actual frame rates are less impressive, too. Sure, the RTX 4090 is way ahead of the RTX 3090 Ti, but it’s hard to imagine someone needs over 200 fps in Gears Tactics when a GPU that’s $500 cheaper is already above 160 fps.

At 4K, the RTX 4090 accomplishes major milestones — above 60 fps in Cyberpunk 2077 without DLSS, near the 144Hz mark for high refresh rate monitors in Assassin’s Creed Valhalla, etc. At 1440p, the RTX 4090 certainly has a higher number, but that number is a lot more impressive on paper than it is on an actual screen.

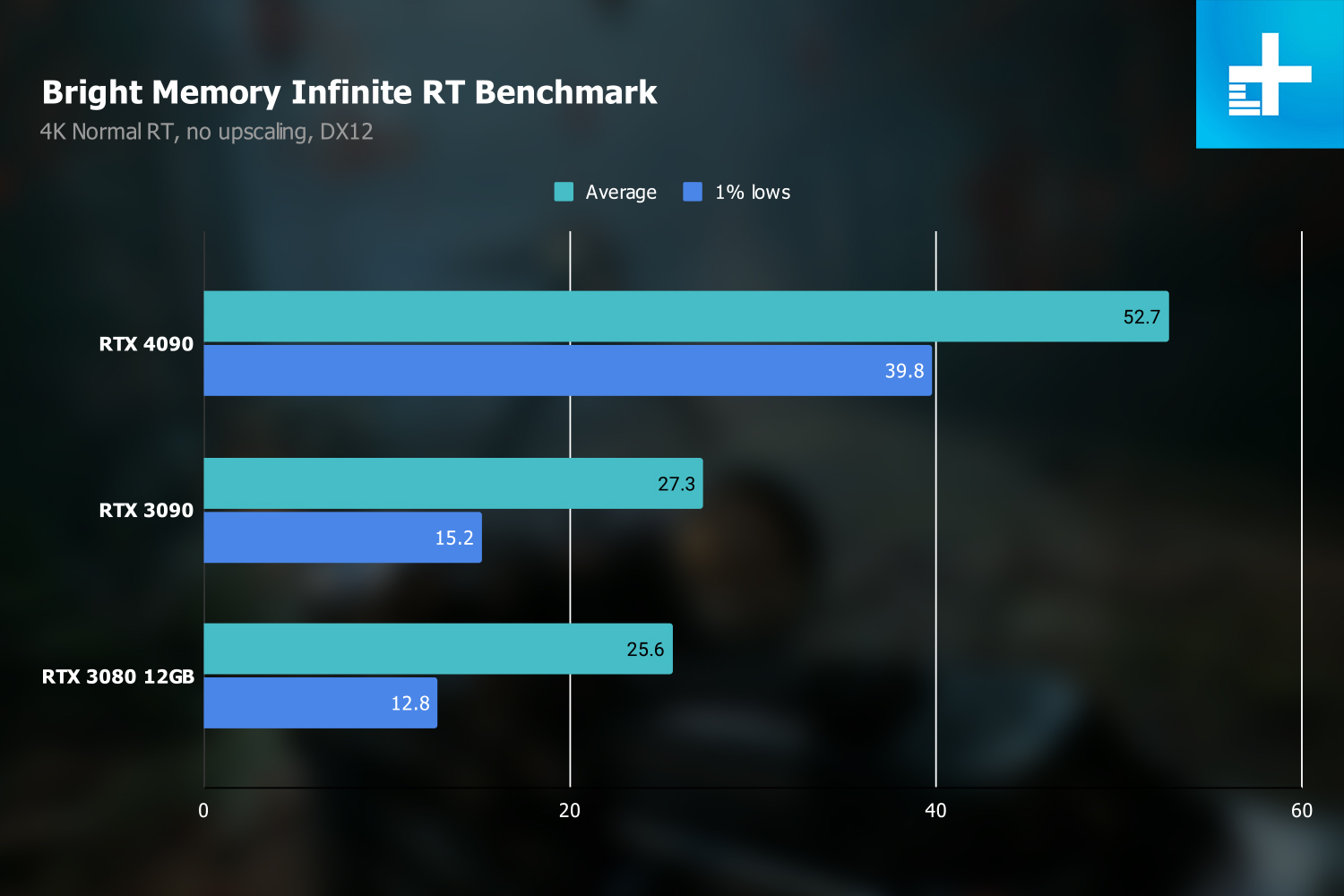

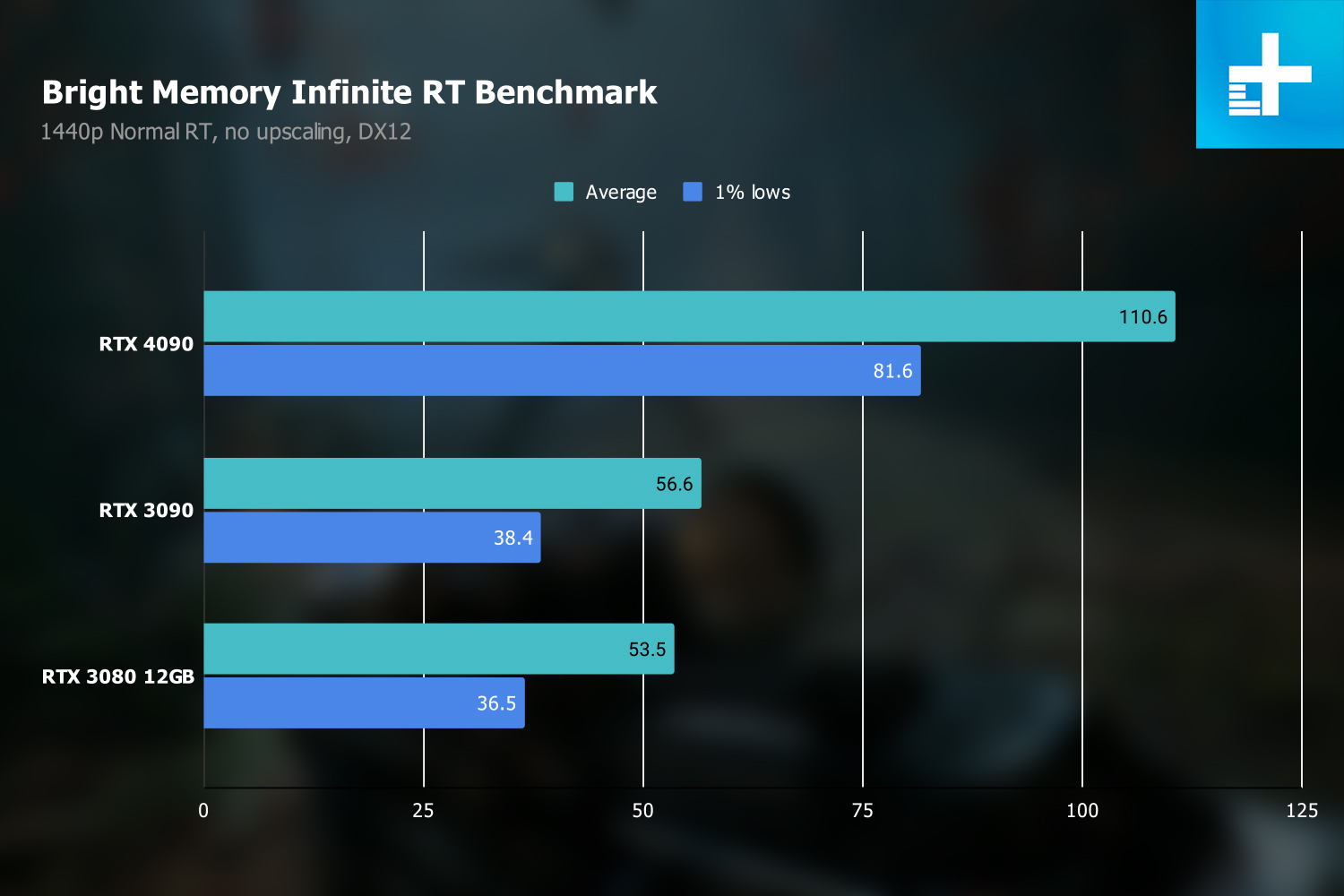

Ray tracing

Nvidia has been a champion of ray tracing since the Turing generation, but Ada Lovelace is the first generation where it’s seeing a major overhaul. At the heart of the RTX 4090 is a redesigned ray tracing core that boosts performance and introduces Shader Execution Reordering (SER). SER is basically a more efficient way to process ray tracing operations, allowing them to execute as GPU power becomes available rather than in a straight line where bottlenecks are bound to occur. It also requires you to turn on hardware-accelerated GPU scheduling in Windows.
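To get a feel for why reordering can help, here’s a toy model. It is not Nvidia’s scheduler. It assumes GPU threads run in lockstep groups of 32 and that a group has to step through every distinct hit shader its threads need, so groups with mixed shaders waste time. The shader IDs, the hit count, and the `shaders_per_warp` helper are all hypothetical.

```python
import random

WARP_SIZE = 32  # threads that execute in lockstep in this toy model


def shaders_per_warp(hit_shaders: list[int]) -> float:
    """Average number of distinct shaders each group of 32 threads has to run."""
    warps = [
        hit_shaders[i:i + WARP_SIZE]
        for i in range(0, len(hit_shaders), WARP_SIZE)
    ]
    return sum(len(set(warp)) for warp in warps) / len(warps)


# Hypothetical workload: 1,024 ray hits scattered across 8 different shaders.
rng = random.Random(0)
hits = [rng.randrange(8) for _ in range(1024)]

incoherent = shaders_per_warp(hits)         # rays processed in arrival order
reordered = shaders_per_warp(sorted(hits))  # rays grouped by shader first

print(f"Arrival order: {incoherent:.2f} shaders per warp")
print(f"Reordered:     {reordered:.2f} shaders per warp")
```

In this simplified picture, grouping similar work together brings each group close to a single shader, which is the kind of bottleneck reordering is meant to relieve.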

And it works. Margins are usually much slimmer with ray tracing turned on, but the RTX 4090 actually shows higher gains with it. In Cyberpunk 2077, for example, the RTX 4090 is nearly 71% faster than the RTX 3090 Ti with the Ultra RT preset. And that’s before factoring in DLSS. AMD’s GPUs, which are much further behind in ray tracing performance, show even larger differences. The RTX 4090 is a full 152% faster than the RX 6950 XT in this benchmark.

Similarly, Metro Exodus Enhanced Edition showed an 80% boost for the RTX 4090 over the RTX 3090 Ti, and Bright Memory Infinite showed the RTX 4090 93% ahead of the RTX 3090. Nvidia’s claim of “two to four times faster” than the RTX 3090 Ti may not hold up without DLSS 3, but ray tracing performance gets much closer to that mark.

And just like 4K performance, the RTX 4090 shows performance improvements that actually make a difference when ray tracing is turned on. In Bright Memory Infinite, the RTX 4090 is the difference between taking advantage of a high refresh rate and barely cracking 60 fps. And in Cyberpunk 2077, the RTX 4090 is literally the difference between playable and unplayable.

DLSS 3 tested

DLSS has been a superstar feature for RTX GPUs for the past few generations, but DLSS 3 is a major shift for the tech. It introduces optical flow AI frame generation, which boils down to the AI model generating a completely unique frame every other frame. Theoretically, that means even a game that’s 100% limited by the CPU and wouldn’t see any benefit from a lower resolution will have twice the performance.

That’s not quite the situation in the real world, but DLSS 3 is still very impressive. I started with 3DMark’s DLSS 3 test, which just runs the Port Royal benchmark with DLSS off and then on. My goal was to push the feature as far as possible, so I set DLSS to its Ultra Performance mode and the resolution to 8K. This is the best showcase of what DLSS 3 is capable of, with the tech boosting the frame rate by 578%. That’s insane.

In real games, the gains aren’t as stark, but DLSS 3 is still impressive. Nvidia provided an early build of A Plague Tale: Requiem, and DLSS managed to boost the average frame rate by 128% at 4K with the settings maxed out. And this was with the Auto mode for DLSS. With more aggressive image quality presets, the gains are even higher.

A Plague Tale: Requiem exposes an important aspect of DLSS 3, though: It incurs a decent amount of overhead. DLSS 3 is two parts. The first part is DLSS Super Resolution, which is the same DLSS you’ve seen on previous RTX generations. It will continue to work with RTX 20-series and 30-series GPUs, so you can still use DLSS 3 Super Resolution in games with previous-gen cards.

DLSS Frame Generation is the second part, and it’s exclusive to RTX 40-series GPUs. The AI generates a new frame every other frame, but that’s computationally expensive. Because of that, Nvidia Reflex is forced on whenever you turn on Frame Generation, and you can’t turn it off.

If you reason through how Frame Generation works, it should provide double the frame rate of whatever you’re getting with just Super Resolution, but that’s not the case. As you can see in Cyberpunk 2077 below, the Frame Generation result means that the GPU is only rendering about 65 frames — the rest are coming from the AI. With Super Resolution on its own, that result jumps up by nearly 30 fps. That’s the DLSS Frame Generation overhead at play.

Obviously, Frame Generation provides the best performance, but don’t count out Super Resolution as obsolete. Although it would seem that Frame Generation doubles DLSS frame rates, it’s actually much closer to Super Resolution on its own in practice.
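The arithmetic behind that overhead can be sketched with the rough Cyberpunk 2077 figures quoted above. It assumes exactly one generated frame per rendered frame, treats “about 65” and “nearly 30 fps” as round numbers, and uses variable names made up for this example.

```python
# Approximate figures from the Cyberpunk 2077 result described above.
rendered_with_fg = 65            # frames per second the GPU renders with Frame Generation on
sr_only = rendered_with_fg + 30  # Super Resolution alone is "nearly 30 fps" higher

# Assumption: one AI-generated frame follows every rendered frame.
displayed_with_fg = 2 * rendered_with_fg

naive_expectation = 2 * sr_only              # if Frame Generation were free
actual_gain = displayed_with_fg / sr_only    # uplift over Super Resolution alone
overhead_fps = sr_only - rendered_with_fg    # rendered frames given up to the overhead

print(f"Super Resolution only: ~{sr_only} fps")
print(f"With Frame Generation: ~{displayed_with_fg} fps (naive guess: {naive_expectation} fps)")
print(f"Real uplift: {actual_gain:.2f}x instead of 2x, with ~{overhead_fps} rendered fps lost")
```

With these round numbers, Frame Generation adds a bit more than a third on top of Super Resolution rather than doubling it.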

You can’t talk about DLSS apart from image quality, and although DLSS 3 is impressive, it still needs some work in the image quality department. Because every other frame is generated on the GPU and sent straight out to your display, it can’t bypass elements like your HUD. Those are part of the generated frame, and they’re ripe for artifacts, as you can see in Cyberpunk 2077 below. The moving quest marker sputters out as it moves across the screen, with the AI model not quite sure where to place pixels as the element moves. Normally, HUD elements aren’t a part of DLSS, but Frame Generation means you have to factor them in.

That same behavior shows up in the actual scene, as well. In A Plague Tale: Requiem, for example, you can see how running through the grass produces a thin layer of pixel purgatory as the AI struggles to figure out where to place the grass and where to place the legs. Similarly, Port Royal showed soft edges and a lot of pixel instability.

These artifacts are best seen in motion, so I captured a bunch of 4K footage at 120 fps, which you can watch below. I slowed down the DLSS comparisons by 50% so you can see as many frames as possible, but keep in mind YouTube’s compression and the fact that it’s difficult to get a true apples-to-apples quality comparison when capturing gameplay. It’s best to see in the flesh.

While playing, the image quality penalties DLSS 3 incurs are easily offset by the performance gains it offers. But Frame Generation isn’t a setting you should always turn on. It’s at its best when you’re pushing ray tracing and all of the visual bells and whistles. Hopefully, it will improve, as well. I’m confident Nvidia will continue to refine the Frame Generation aspect, but at the moment, it still shows some frayed edges.

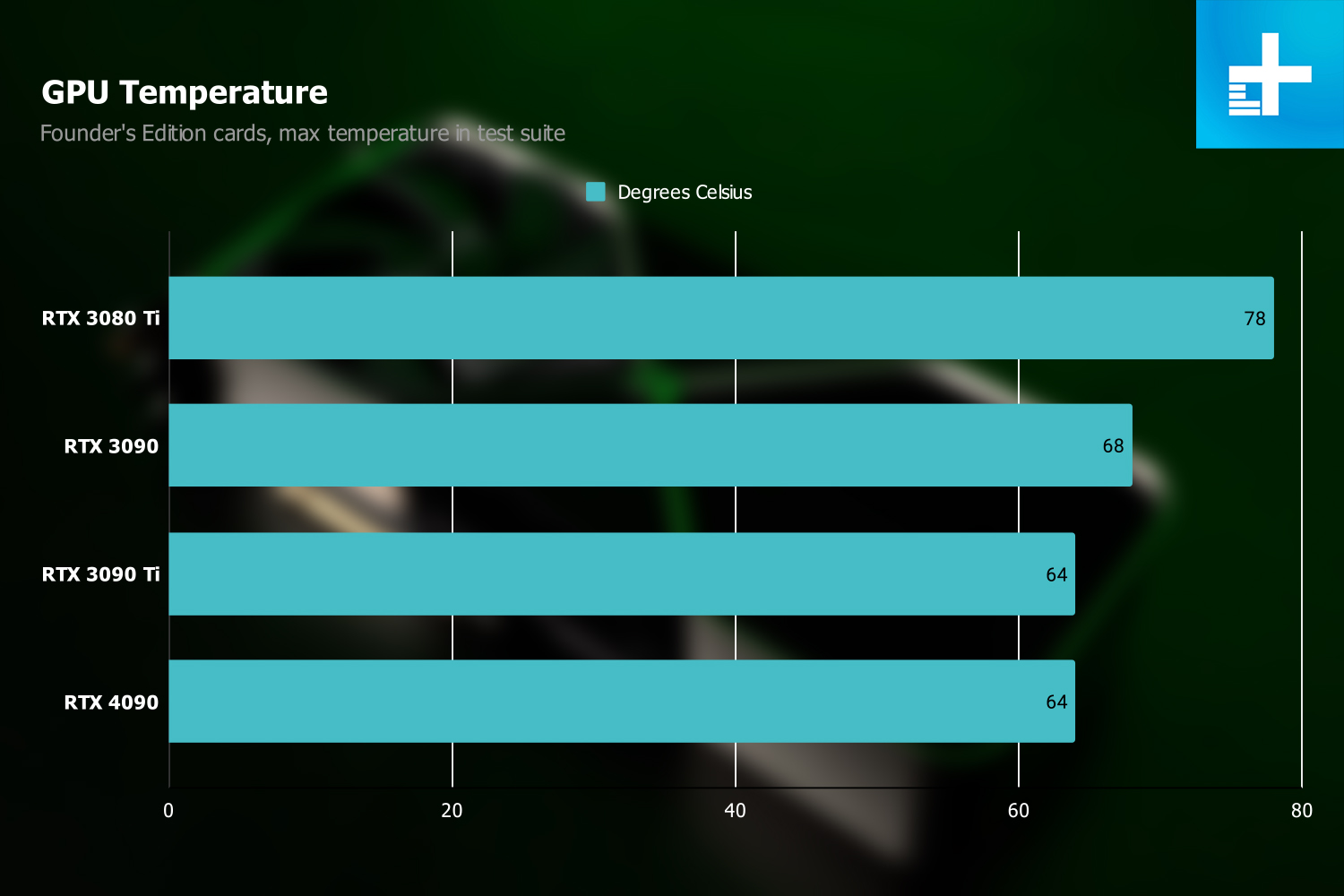

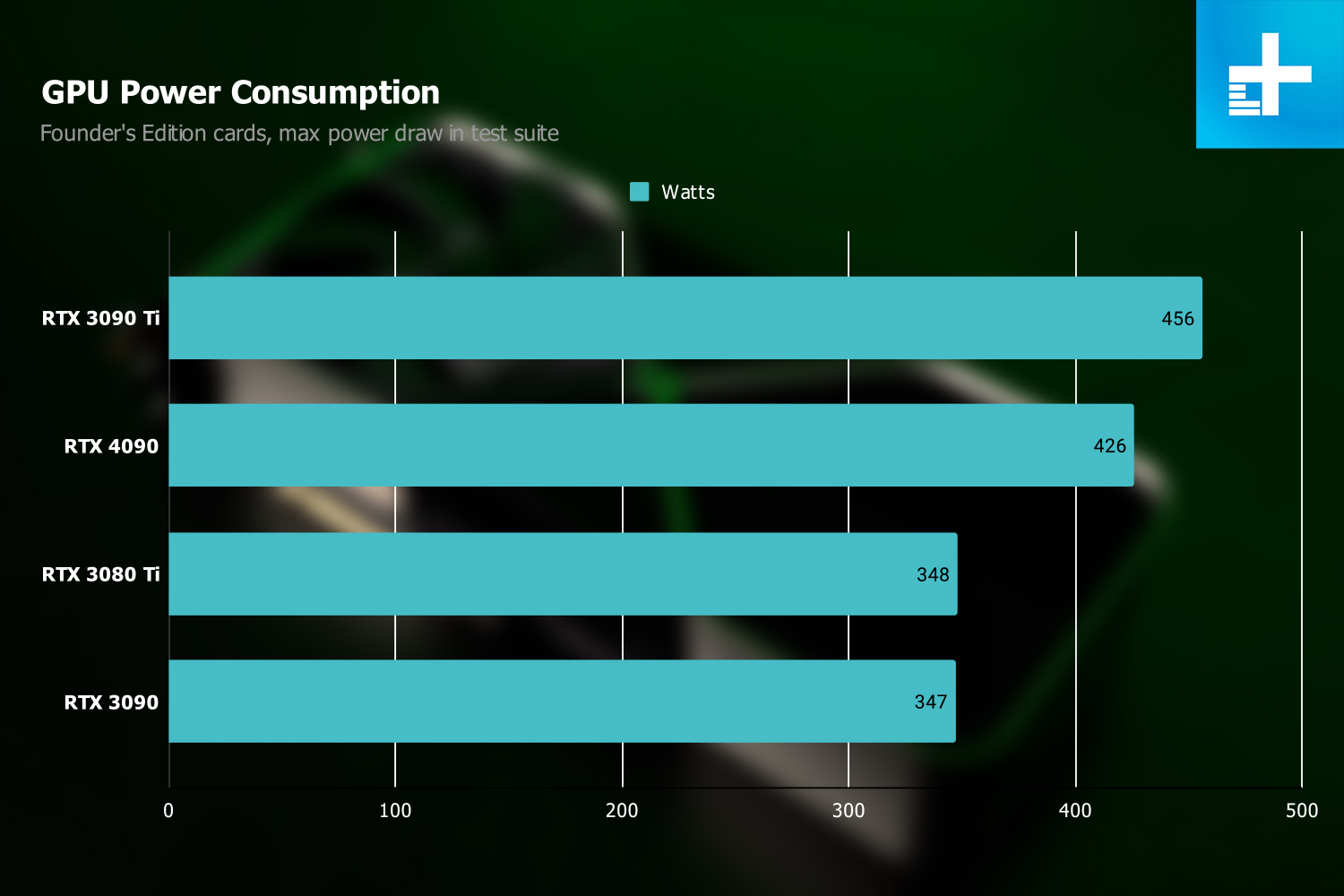

Power and thermals

Leading up to the RTX 4090 announcement, the rumor mill ran rampant with speculation about obscene power demands. The RTX 4090 draws a lot of power (450W for the Founders Edition and even more for board partner cards like the Asus ROG Strix RTX 4090), but it’s not any more than the RTX 3090 Ti drew. And based on my testing, the RTX 4090 actually draws a little less.

The chart below shows the highest power draw I measured while testing. This isn’t the card’s absolute maximum, since a dedicated stress test would push the RTX 4090 further, but games aren’t stress tests, and you won’t always reach max power (or even get close). Comparing other Founders Edition models, the RTX 4090 actually consumed about 25W less than the RTX 3090 Ti. Overclocked board partner cards will climb higher, though, so keep that in mind.

For thermals, the RTX 4090 peaked at 64 degrees Celsius in my test suite, which is right around where it should sit. The smaller RTX 3080 Ti with its pushed clock speeds and core counts showed the highest thermal results, peaking at 78 degrees. All of these numbers were gathered on an open-air test bench, though, so temperatures will be higher once the RTX 4090 is in a case.

Should you buy the Nvidia RTX 4090?

If you have $1,600 to spend on a GPU, yes, you should buy the RTX 4090. Most people don’t have $1,600 to spend on a graphics card, though, which is where the RTX 4090 gets tricky.

I can show chart after chart showing how powerful the RTX 4090 is, how $1,600 is a relatively fair price to ask, and how DLSS 3 massively improves gaming performance. But the fact remains that the RTX 4090 costs more than many full gaming PCs do. This is far from the graphics card for most people. It’s not even the graphics card for most enthusiasts.

The RTX 4090 is worth it, but that doesn’t mean you should buy it. We still have a very incomplete picture of the next generation — the RTX 4080 models are arriving in November, and AMD is set to launch its RX 7000 GPUs on November 3. For most people, the best option is to wait. We’re not headed for another GPU shortage, so there’s no reason to believe the RTX 4090 will become more expensive once these cards are out.

If you’re in the slim percentage of people that want the best simply because it’s the best, the RTX 4090 is firmly holding that title. The RTX 4090 is much more expensive than the average PC gamer is looking to spend, but then again, it’s much more than an average graphics card.