Looking at the new Lily Camera, you’ll spot an immediate resemblance to the many consumer quadcopter drones already on the market. But its creator, Antoine Balaresque, purposely avoids calling it a quadcopter or a drone, because those terms, he says, describe something that requires an operator to control it and is meant to be sent far away. Lily, he says, is the world’s first autonomous, smart “flying camera” that doesn’t rely on any human intervention, because it uses computer vision technology and GPS to track its user.

“We designed it as a camera, and never thought of it as a drone or quadcopter,” Balaresque says. “Think of it as your own personal cameraman.”

It was that idea of a “personal cameraman” that led to the creation of this robot. After reviewing photos from a family vacation, Balaresque noticed that his mother was missing from many of them, because she was the one behind the camera. What if he could create a flying camera that would hover around the family, capturing photos and videos of everyone at once?

It sounds like science fiction, but that’s what he and cofounder Henry Bradlow set out to build. Drawing on their computer science background from the University of California, Berkeley, their experience in the UC Berkeley Robotics Laboratory, and their participation in “hackathon” events, the two founded Lily, a five-person robotics company based in Menlo Park, California. The Lily Camera, a project that started two years ago, will be its first flagship product.

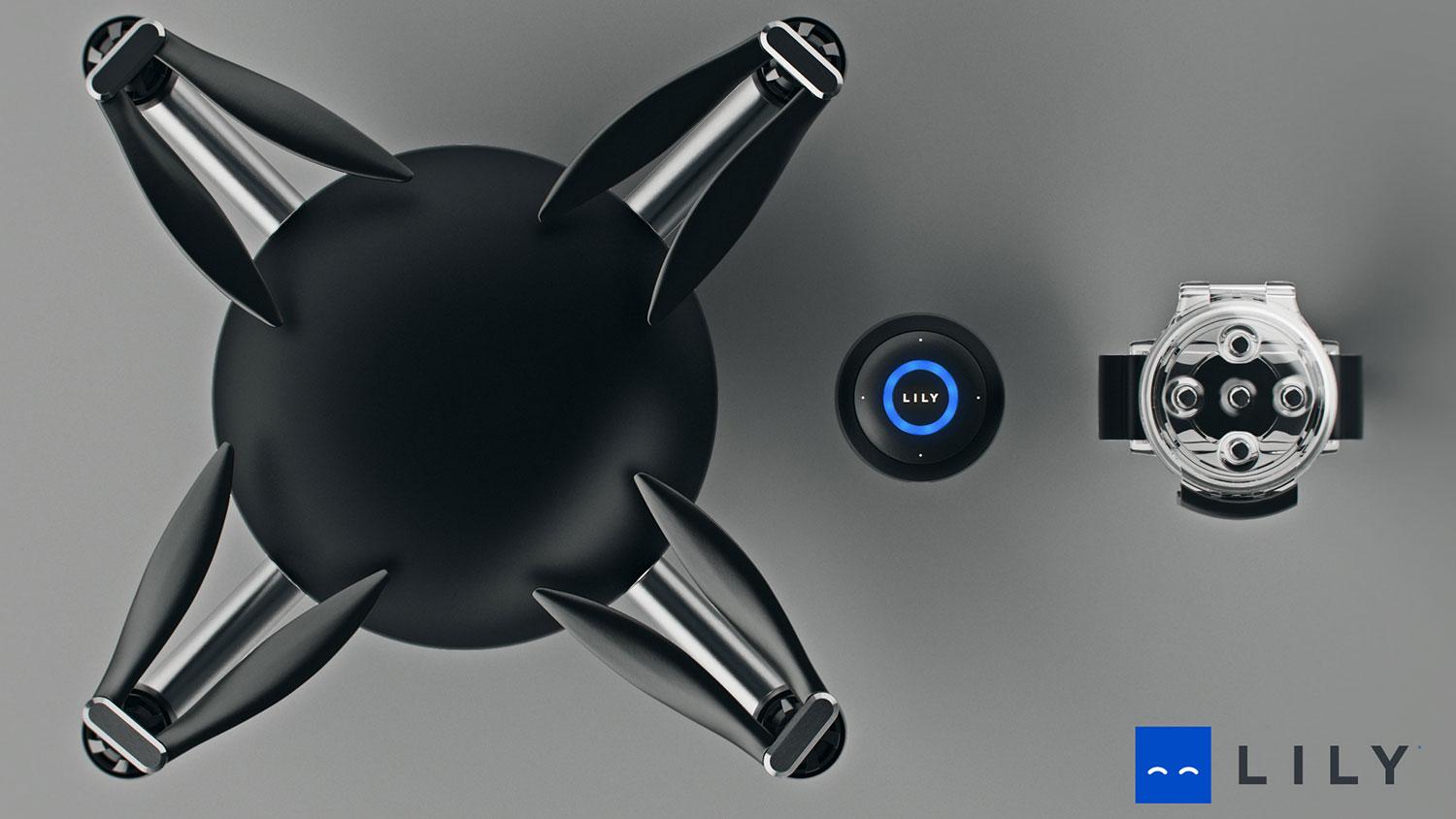

Rugged and waterproof, the Lily Camera is built mainly from off-the-shelf parts. It uses Sony’s IMX117 1/2.3-inch image sensor (the same one found in GoPro’s Hero3+ Black) to shoot 12-megapixel stills and Full HD 1080p video at 60 frames per second, with digital image stabilization. At nearly 3 pounds, the Lily Camera feels heavy, but that’s due in part to the rugged design and the internal sensors needed for autonomous operation, which include an accelerometer, a three-axis gyro, a barometer, GPS, and front- and bottom-facing cameras. Balaresque says it’s normally difficult for startups to acquire high-end parts like those in the Lily Camera, but it helps when the company’s advisers include a former GoPro brand manager and a Dropcam executive.

But it’s the technology that controls the Lily Camera that’s fascinating. Although the user never has to pilot the robot, he or she does need to hold or wear a small tracking device that relays the user’s position, distance, and speed over Wi-Fi. Combining that data with its onboard sensors and computer-vision algorithms, the Lily Camera determines how close and how high to fly, as well as where to follow and circle the user. And because it knows the user’s exact position, the Lily Camera can take off from and land on the user’s hand.

For safety, the Lily Camera has a maximum speed of 25 miles per hour, won’t fly higher than 50 feet or more than 100 feet away from the user, and uses its camera sensors to keep at least 5 feet from people and objects. While it operates autonomously, the user can use the tracking device to tell it exactly where to be (off to the side, or circling 360 degrees) and to zoom in or out. If it goes out of range or the battery runs low, a failsafe-landing feature brings it down. There’s also a kill switch on the remote, should it ever become necessary.

The Lily Camera will also come with companion apps for iOS and Android. A smartphone gives the user a live view of what Lily is recording, access to basic adjustments, and the ability to create flight paths (you still can’t fly the Lily Camera manually, as that would defeat its purpose). Balaresque says the firmware is upgradeable, which could give the robot new features in the future.

Using the Lily Camera is simple. Blue lights arranged like a smiley face (too cute!) indicate it’s on. You can throw the unit into the air and it will take off, or, as Balaresque showed us during a demo with a very early, crude, fully 3D-printed prototype, you can have the Lily Camera take off from and land on your hand. Admittedly, it seems a bit dangerous to have those fast-whirling propellers spinning near your hand, but we are happy to report that we escaped unscathed. With the tracking device in hand, Balaresque had the prototype circling around him, and it never drifted away.

There were a few hiccups during the demonstration, but it’s still early days: the production unit won’t be available until February 2016. Still, Balaresque wanted to show off the Lily Camera’s autonomous capabilities, and from what we saw, it works.

The Lily Camera will come fully assembled and ready to use out of the box. Balaresque says he wanted it to be ready to fly and shoot whenever you want, without any time spent putting it together. The whole thing fits inside a standard backpack, which is how Balaresque hauls it around.

As for shooting video, the Lily Camera will have some interesting features. The tracking device doubles as an audio recorder, so the camera can shoot video while your audio is recorded close to you, even when the camera itself is several feet away. Thanks to its sensors, the Lily Camera also knows when there’s a sudden change in activity. Say you’re riding a skateboard and perform a trick along the way: the camera will detect that change and log it, so you can find the highlight later when editing (a feature similar to one in Sony’s new 4K Action Cam). And because it records GPS data, you can overlay that information on a recorded video, too.

Photos and videos can be recorded to the 4GB internal memory or a removable SD card. Unfortunately, like most quadcopters, the Lily Camera has a flight time of only 20 minutes.

Drones like DJI’s Phantom 2 Vision incorporate some autonomous features, but Balaresque says they still require human input and GPS waypoints. His device is designed to be completely autonomous. Over time, it can learn more about the user, such as recognizing the colors of his or her clothing. But the Lily Camera is also an early example of what’s to come: with miniaturization, Balaresque says, flying cameras will get smaller, their cameras will get better, and the computer vision technology will get even smarter.

If you want to be one of the first to experience the Lily Camera, the company is taking preorders for $499; when it starts shipping, it will retail for $999. Balaresque says this isn’t a fundraiser but more of an awareness campaign, since the company has already lined up production. It’s too early for us to say how well the Lily Camera will work, as we’ve only seen a prototype with a low-quality VGA camera, but the final product should have a camera comparable to higher-end action cams. If it flies better than what Balaresque showed us, the Lily Camera could introduce a new way for consumers to shoot their photos and videos.